How to Read Economics Research Papers: Randomized Controlled Trials (RCTs)

Course Outline

How to Read Economics Research Papers: Randomized Controlled Trials (RCTs)

This video shows how to read economics research papers that use randomized trials (sometimes called randomized controlled trials or randomized clinical trials or RCTs). The video expands on our introduction to randomized trials, which features a study of the effects of classroom electronic use on student learning in econ 101 classrooms (research paper).

We begin here with the interpretation of tables of descriptive statistics and statistical tests for balance between treatment and control groups. We then see how to read the key findings in a published paper of this sort, and learn why regression is used with randomized controlled trials.

Learn to decode the work of the masters!

Teacher Resources

Related to this course

See all Teacher Resources related to this course

Transcript

On his quest to master econometrics, Grasshopper Kamal has made great progress, stretching his capabilities and outsmarting his foes. Alas, today he's despondent, for one challenge remains unmet. Kamal cannot yet decode the scriptures of academic research -- journals like "The American Economic Review" and "Econometrica." These seemed to him to be inscribed in an obscure foreign tongue.

Ugh, what the... ?

These volumes are opaque to the novice, Kamal, but can be deciphered with study. Let us learn to read them together.

Let's dive into the West Point study, published in the "Economics of Education Review." This paper reports on a randomized evaluation of student electronics use in Economics 101 classrooms. First, a quick review of the research design.

Okay.

Metrics masters teaching at West Point, the military college that trains American Army officers designed a randomized trial to answer this question. These masters randomly assigned West Point cadets into Economics classes operating under different rules. Unlike most American colleges, the West Point default is no electronics.

For purposes of this experiment, some students were left in such traditional technology-free classes -- no laptops, no tablets and no phones! This is the control group, or baseline case.

Another group was allowed to use electronics. This is the treatment group, subject to a changed environment. The treatment in this case is the unrestricted use of laptops or tablets in class. Every causal question has a clear outcome—the variables we hope to influence defined in advance of the study.

The outcomes in the West Point electronics study are final exam scores. The study seeks to answer the following question: What is the causal effect of classroom electronics on learning as measured by exam scores?

Economics journal articles usually begin with a table of descriptive statistics, giving key facts about the study sample.

Oh my gosh, I remember this table—so confusing!

Columns 1 to 3 report mean, or average, characteristics. These give a sense of who we're studying. Let's start with column 1 which describes covariates in the control group. Covariates are characteristics of the control and treatment groups measured before the experiment begins. For example, we see the control group has an average age a bit over 20. Many of these covariates are dummy variables. A dummy variable can only have two values—a zero or a one.

For example, student gender is captured by a dummy variable that equals one for women and zero for men. The mean of this variable is the proportion female. We also see that the control group is 13% Hispanic and 19% had prior military service. The table notes are key. Refer to these as you scan the table. These notes explain what's shown in each column and panel.

The notes tell us, for example, that standard deviations are reported in brackets. Standard deviations tell us how spread out the data are. For example, a standard deviation of 0.52 tells us that most of the control group's GPAs fall between 2.35, which is 0.52 below the mean GPA of 2.87, and 3.39, which is 0.52 above 2.87. A lower standard deviation would mean the GPAs were more tightly clustered around the mean.

Yeah, but they're missing for most of the variables.

That's right. Masters usually omit standard deviations for dummies because the mean of this variable determines its standard deviation. This study compares two treatment groups with the control group. The first was allowed free use of laptops and tablets. The second treatment was more restrictive, allowing only tablets placed flat on the desk. The treatment groups look much like the control group.

This takes us to the next feature of this table, columns 4 through 6 use statistical tests to compare the characteristics of the treatment and control group before the experiment. In column 4, the two treatment groups are combined. You can see that the difference in proportion female between the treatment and control group is only 0.03. The difference is not statistically significant -- it is the sort of difference we can easily put down to chance results in our sample selection process. -

Hmm, how do we know that?

Remember the rule of thumb? Statistical estimates that exceed the standard error by a multiple of 2 in absolute value are usually said to be statistically significant. The standard error is 0.03, same as the difference in proportion female. So the ratio of the latter to the former is only 1, which, of course, is less than 2.

Uh-huh! So none of the treatment/control differences in the table are more than twice their standard errors.

Correct. The random division of students appears to have succeeded in creating groups that are indeed comparable. We can be confident, therefore, that any later differences in classroom achievement are the result of the experimental intervention rather than a reflection of preexisting differences. Ceteris paribus achieved!

Cool. Wait, what about the bottom, the numbers with the stars? Those differences are a lot more than double the standard error.

Good eye, Kamal! The table has many numbers. Those in Panel B are important too. This panel measures the extent to which students in treatment and control groups actually use computers in class.

The treatment here was to allow computer use. The researchers must show that students allowed to use computers took advantage of the opportunity to do so. If they didn't, then there's really no treatment. Luckily, 81% of those in the first treatment group used computers compared with none in the control group. And many in the second tablet treatment group used computers as well. These differences in computer use are large and statistically significant. We also get to see the sample size in each group.

The stars are just like decoration?

Some academic papers use stars to indicate differences that are statistically significant. This makes them jump out at you. Here, three stars indicate that the result is statistically different from zero with a p-value less than 1%. In other words, there's less than a 1 in 100 chance this result is purely a chance finding. Two stars indicate a 1 in 20 or 5% chance of a chance finding. And one star denotes results we might see as often as 10% of the time merely due to chance. Today, stars are seen as a little old fashioned. Some journals omit them.

What about those last two columns?

Unlike column 4, which combines both treatment groups into one, these last two columns look separately at treatment/control differences for each treatment group. This provides a more detailed analysis of balance. Also, for now, you can ignore this row which provides another test of significance. Now we get to the article's punchline, table 4. This table reports regression estimates of the effects of electronics use on measures of student learning.

Why does the study report regression estimates? See, that's why I was getting lost. I thought one reason why we liked randomized trials is that we use them to obtain causal effects simply by comparing treatment and control groups. Since these groups are balanced, no need to use regression.

Well said, Kamal. In practice, it's customary to report regression estimates for two reasons. First, evidence of balance notwithstanding, an abundance of caution might lead the analyst to allow for chance differences. Second, regression estimates are likely to be more precise -- that is, they have lower standard errors than the simple treatment control comparisons.

The dependent variable in this study is the outcome of interest. Since the question at hand is how classroom electronics affect learning, a good outcome is the Economics final exam score. Each column reports results from a different regression model. Models are distinguished by the control variables or covariates they include besides treatment status. Estimates with no covariates are simple comparisons of treatment and control groups.

I thought they just forgot to fill it out.

Column 1 suggests electronics use reduced final exam scores by 0.28 standard deviations. In our last lesson, Master Joshway explained that we use standard deviation units because these units are easily compared across studies. Column 2 reports results from a model that adds demographic controls. Here, we're comparing test scores but holding constant factors such as age and sex. Column 3 reports results from a model that adds GPA to the list of covariates. Column 4 adds ACT scores.

Analysts often report results this way, starting with models that include few or no covariates and then reporting estimates from models that add more and more covariates as we move across columns. Looking across columns, what do you notice?

Well, the coefficient on using a computer is always a pretty big negative number.

That's right! We can also see that the standard errors are small enough to make these negative results statistically significant. In other words, the primary takeaway from this experiment is that electronics in the classroom reduce student learning.

GPA and ACT scores are also significant. Why is that?

Good observation! That's not surprising. We expect these variables to predict college performance.

Oh right, of course. Kids who got better grades before are more likely to get a better grade in this course.

You'll also notice a lot of other information on this table. Remaining panels in the table report effects of electronics use on components of the final exam, such as the multiple choice questions. These results are mostly consistent with computer use effects on overall scores.

What about the rows not in English?

These rows give additional statistical information. R-squared is a measure of goodness of fit. This isn't too important, though some readers may want to know it. Other rows report on alternative tests of statistical significance that you can ignore for now.

Oh my gosh, these tables aren't that hard! Thank you so much.

Next up is regression. See you then!

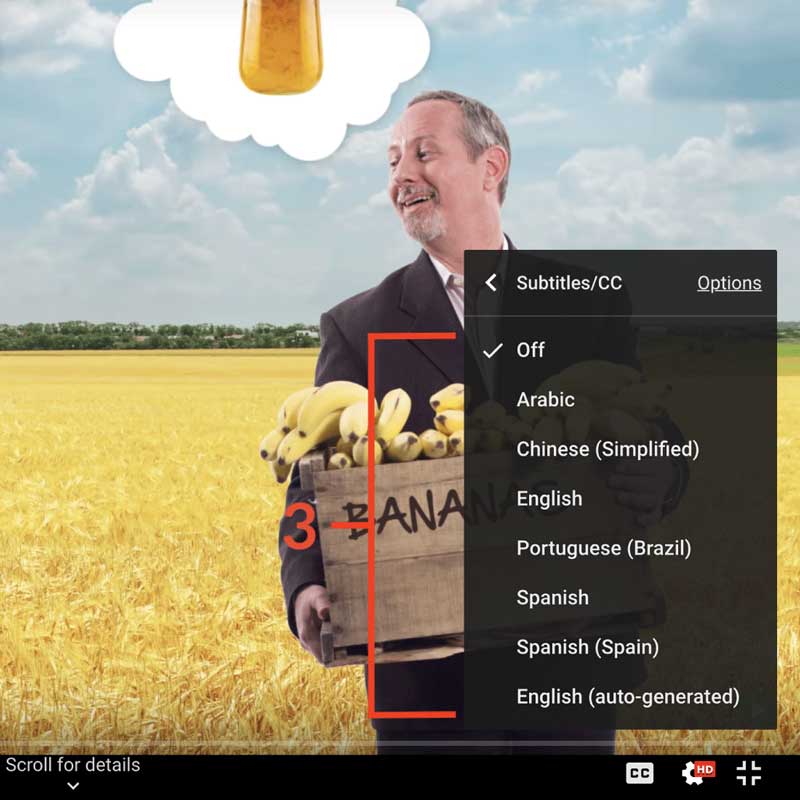

Subtitles

- English

- Spanish

- Chinese

Thanks to our awesome community of subtitle contributors, individual videos in this course might have additional languages. More info below on how to see which languages are available (and how to contribute more!).

How to turn on captions and select a language:

- Click the settings icon (⚙) at the bottom of the video screen.

- Click Subtitles/CC.

- Select a language.

Contribute Translations!

Join the team and help us provide world-class economics education to everyone, everywhere for free! You can also reach out to us at [email protected] for more info.

Submit subtitles

Accessibility

We aim to make our content accessible to users around the world with varying needs and circumstances.

Currently we provide:

- A website built to the W3C Web Accessibility standards

- Subtitles and transcripts for our most popular content

- Video files for download

Are we missing something? Please let us know at [email protected]

Creative Commons

This work is licensed under a Creative Commons Attribution-NoDerivatives 4.0 International License.

The third party material as seen in this video is subject to third party copyright and is used here pursuant

to the fair use doctrine as stipulated in Section 107 of the Copyright Act. We grant no rights and make no

warranties with regard to the third party material depicted in the video and your use of this video may

require additional clearances and licenses. We advise consulting with clearance counsel before relying

on the fair use doctrine.