Introduction to Randomized Trials

Course Outline

Introduction to Randomized Trials

MIT’s Josh Angrist introduces us to our most powerful weapon: randomized trials!

Randomized trials originate in medical research, where they’re called “randomized clinical trials” or “RCTs.” That’s why randomized trials are said to measure “treatment effects”.

Along the way, Master Joshway explains how a randomized trial conducted at the US Military Academy reveals the hidden costs of classroom electronics. In this video, we cover the following:

- The difference between control and treatment groups

- Checking for balance

- The Law of Large Numbers

- Treatment effects

- Standard errors and statistical significance

Teacher Resources

Related to this course

See all Teacher Resources related to this course

Transcript

The path from cause to effect is dark and dangerous, but the weapons of Econometrics are strong. Behold the most powerful, the sword of random assignment, which cuts to the heart of causal questions. We begin with our most powerful and costliest weapon -- randomized trials. . -

Every ‘metrics mission begins with a causal question. Clear questions lead to clear answers. The clearest answers come from randomized trials. Let's see how and why randomized trials provide especially convincing answers to causal questions.

Like a finely honed sword, randomized trials cut to the heart of a causal problem, creating convincing apples- to-apples comparisons. Yet like any finely made weapon, randomized trials are expensive and cannot be done quickly.

Randomized trials originate in medical research where they're called randomized clinical trials or RCTs. The U.S. Food and Drug Administration requires drug manufacturers to establish the safety and efficacy of new drugs or medical treatments. They do this through a series of RCTs. That's why randomized trials are said to measure treatment effects.

You may have contributed to another kind of randomized trial -- the A/B tests Silicon Valley companies use to compare marketing strategies. For example, Amazon randomizes search results in a constant stream of hidden experiments.

Randomized trials are also important in education research. They've been used to answer a causal question near and dear to my beating teacher's heart -- should laptops and other electronic devices be allowed in the classroom? Many see classroom electronics as a learning aid. But others, like me, think they're a damaging distraction. Who's right?

‘Metrics masters teaching at West Point, the military college that trains American Army officers, designed a randomized trial to answer this question. These masters randomly assign West Point cadets into economics classes operating under different rules.

Unlike most American colleges, the West Point default is no electronics. For purposes of this experiment, some students were left in such traditional technology-free classes -- no laptops, no tablets, and no phones! This is the control group, or baseline case. Another group was allowed to use electronics. This is the treatment group, subject to a changed environment. The treatment in this case is the unrestricted use of laptops or tablets in class.

Every causal question has a clear outcome -- the variables we hope to influence, defined in advance of the study. The outcomes in the West Point electronics study are final exam scores. The study seeks to answer the following question -- what is the causal effect of classroom electronics on learning as measured by exam scores?

West Point economics students were randomly assigned to either the treatment or control groups. Random assignment creates ceteris paribus comparisons, allowing us to draw causal conclusions by comparing groups. The causality-revealing power of a randomized trial comes from a statistical property called the law of large numbers. When statisticians and mathematicians uncover something important and reliably true about the natural world, they call it a law. The law of large numbers says that when the groups randomized are large enough, the students in them are sure to be similar on average, in every way. This means that groups of students randomly divided can be expected to have similar family background, motivation, and ability. Sayonara selection bias, at least in theory.

In practice, the groups randomized might not be large enough for the law of large numbers to kick in. Or the researchers might have messed up the random assignment. As in any technical endeavor, even experienced masters are alert for a possible foul-up. We therefore check for balance, comparing student background variables across groups to make sure they indeed look similar.

Here's the balance check for West Point. This table has two columns -- one showing data from the control group and one showing data from the treatment group. The rows list a few of the variables that we hope are balanced -- sex, age, race, and high school GPA, among others. The first row indicates what percentage of each group is female. It's 17% for the control group and 20% for the treatment group. Kamal, how does balance look for GPA? “Controls have a GPA of 2.87, while treated have a GPA of 2.82, pretty close.”

Happily, the two groups look similar all around. “How big does our sample have to be for the law of large numbers to kick in?” The West Point study, which involved about 250 students in each group, is almost certainly big enough. There are no hard and fast rules here. In another video, you'll learn how to confirm the hypothesis of group balance with formal statistical tests.

The heart of the matter in this table is the estimated treatment effect. Remember, the treatment in this case is permission to use electronics in class. Treatment effects compare averages between control and treatment groups. The group Allowed Classroom Electronics had an average final exam score 0.28 standard deviations below the score for students in the control group. How big an effect is this?

Social scientists measure test scores in standard deviation units because these units are easily compared across studies. We know from a long history of research on classroom learning that 0.28 is huge. A decline of 0.28 is like taking the middle student in the class and pushing him or her down to the bottom third.

How certain can we be that these large results are meaningful? After all, we're looking at a single randomized division between treatment and control groups. Other random splits might have produced something different.

We therefore quantify the sampling variance in estimates of causal effects. “What is sampling variance?” Sampling variance tells us how likely a particular statistical finding is to be a chance result, rather than indicative of an underlying relationship. Sampling variance is summarized by a number, called the standard error of the estimated treatment effect. Don't worry -- we'll cover this important idea in depth later. Just remember the smaller the standard error, the more conclusive the findings.

On the other hand, if the standard error is large relative to the effect we're trying to estimate, there's a pretty good chance we'd get something different if we were to rerun the experiment. You can think of the standard error as a way to gauge how much we can trust the result we're seeing.

In this study, the relevant standard error is 0.1. For now, it's enough to learn a simple rule of thumb. When an estimated treatment effect is more than double its standard error, the odds this non-zero result is due to chance are very low, roughly 1 in 20. Because this is so unlikely, we say that estimates that are two or more times larger than the associated standard errors are statistically significant.

Camilla, is the treatment effect in the West Point study statistically significant? “The standard error is 0.10 and the treatment effect is 0.28, so 0.28 is more than two times larger than 0.10, so yeah.” Correct. The lost learning caused by electronics use in Econ 101 is therefore both large and statistically significant.

Randomized trials usually provide the most convincing answers to causal questions. When this weapon is in our tool kit, we use it. Random assignment allows us to claim ceteris has indeed been made paribus. But randomized trials could be tough to organize. They may be expensive, time-consuming, and, in some cases, considered unethical. So masters look for convincing alternatives.

The alternatives try to mimic the causality revealing power of a randomized trial but without the time, trouble, and expense of a purpose-built experiment. These alternative tools are applied in real world scenarios that mimic random assignment

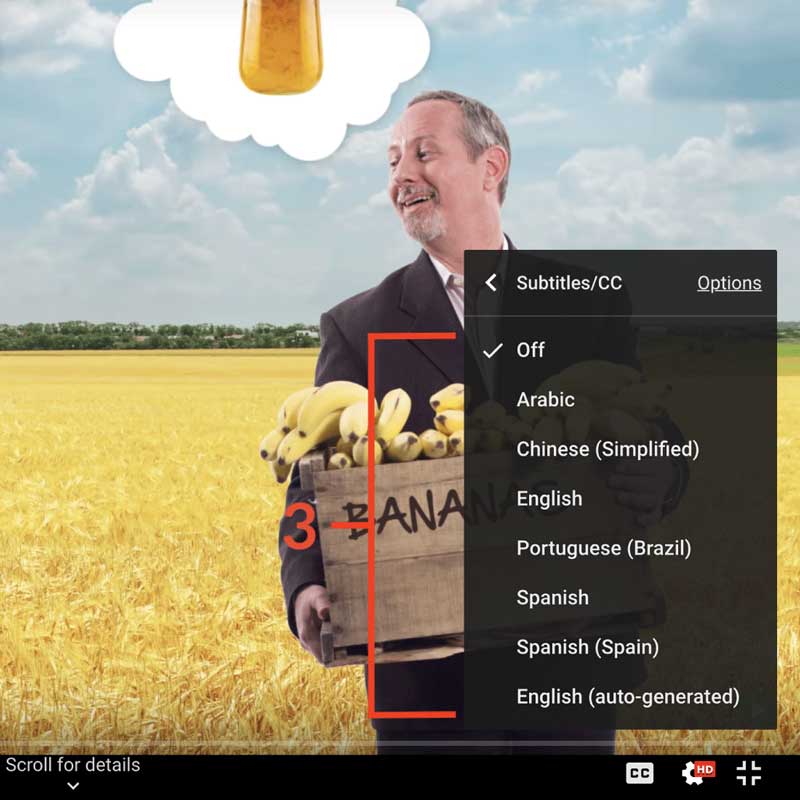

Subtitles

- English

- Spanish

- Chinese

Thanks to our awesome community of subtitle contributors, individual videos in this course might have additional languages. More info below on how to see which languages are available (and how to contribute more!).

How to turn on captions and select a language:

- Click the settings icon (⚙) at the bottom of the video screen.

- Click Subtitles/CC.

- Select a language.

Contribute Translations!

Join the team and help us provide world-class economics education to everyone, everywhere for free! You can also reach out to us at [email protected] for more info.

Submit subtitles

Accessibility

We aim to make our content accessible to users around the world with varying needs and circumstances.

Currently we provide:

- A website built to the W3C Web Accessibility standards

- Subtitles and transcripts for our most popular content

- Video files for download

Are we missing something? Please let us know at [email protected]

Creative Commons

This work is licensed under a Creative Commons Attribution-NoDerivatives 4.0 International License.

The third party material as seen in this video is subject to third party copyright and is used here pursuant

to the fair use doctrine as stipulated in Section 107 of the Copyright Act. We grant no rights and make no

warranties with regard to the third party material depicted in the video and your use of this video may

require additional clearances and licenses. We advise consulting with clearance counsel before relying

on the fair use doctrine.